The AI Ear for Cetacean Chatter

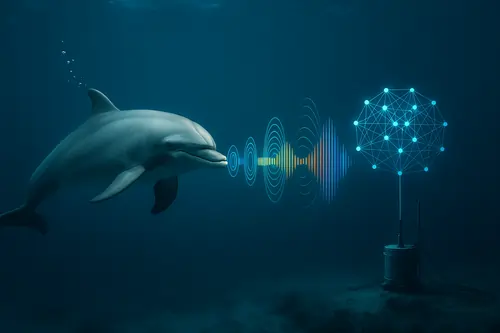

Dolphins have been conversing for centuries in the vast, deep expanse of our seas. Whistles, clicks, and a symphony of other underwater sounds help them talk with one another in a language as sophisticated as it is hard to define. Marine biologists have long been intrigued by these vocalizations, yet decoding dolphin chatter remains one of the major challenges in science. In a remarkable blend of technology and nature, Google's AI research branch has now created a model meant to interpret cetacean sounds. Yes, artificial intelligence is listening to dolphins.

This development has major implications for wildlife preservation, animal behavior research, and our basic understanding of communication beyond the human sphere. In this post, we will cover how Google's AI works, what it has accomplished, why it matters, and what it could mean for our future relationship with the animal world.

Why Dolphins?

Before getting into the technology, it's worth asking why dolphins are such fascinating subjects of study in linguistics and artificial intelligence. Known for their striking intelligence, social nature, and vocal gifts, dolphins belong to the cetacean group alongside whales and porpoises. They produce several kinds of sound, including clicks, whistles, and burst pulses, that help with navigation, hunting, and social communication.

Some scientists even contend that dolphins have something comparable to names: signature whistles unique to each individual, which companions use to call them specifically. This complex vocal behavior has made dolphins a decades-long research interest among marine biologists, neuroscientists, and language researchers.

But translating these sounds into terms humans can understand has proven very challenging. The frequency, speed, and context-specific character of dolphin sounds have left most conventional analysis methods inadequate. Enter artificial intelligence.

The Rise of AI in Animal Communication Studies

Using artificial intelligence to decode animal communication is not entirely new. Over the last ten years, AI models have been applied to bird songs, elephant rumbles, and even honeybee dances. AI's strength lies in its capacity to analyze huge quantities of data and find patterns that the human eye or ear cannot discern.

The Cetacean Translation Initiative (CETI) drew attention in 2022 for seeking to translate sperm whale communication using artificial intelligence. Inspired by such initiatives, Google's AI scientists have now turned their focus to dolphins, perhaps the most vocal of all marine mammals.

Google's AI Model: The Dolphin "AI Ear"

Google's dolphin initiative centers on machine learning, a branch of artificial intelligence that trains algorithms to identify patterns in data. The process started with collecting a large volume of dolphin sound recordings. Marine biologists gathered these recordings over many years using underwater microphones (hydrophones) and acoustic sensors positioned in dolphin-populated areas.

The next stage was to feed the audio files to a deep learning model, a category of artificial intelligence that uses neural networks loosely modeled on the processing of the human brain. The model learned to:

1. Identify and classify different dolphin vocalizations (whistles, clicks, burst pulses).

2. Cluster similar sounds together to detect repeating patterns.

3. Associate specific sounds with observed behaviors (such as navigation, feeding, or social interactions).
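To make the first two steps concrete, here is a minimal sketch of the general idea: extract a feature from each sound, then group similar sounds without labels. It is not Google's model. The synthetic sine tones, the zero-crossing frequency estimate, the 96 kHz sample rate, and the helper names (`synth_tone`, `dominant_freq`, `kmeans_1d`) are all assumptions made for illustration; a real pipeline would use recorded audio and a far richer learned representation.

```python
import math

SAMPLE_RATE = 96_000  # Hz; assumed recording rate for this toy example


def synth_tone(freq_hz, duration_s=0.01):
    """Generate a pure sine tone as a stand-in for one recorded call."""
    n = int(SAMPLE_RATE * duration_s)
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]


def dominant_freq(signal):
    """Estimate frequency in Hz from the zero-crossing count (a crude feature)."""
    crossings = sum(1 for a, b in zip(signal, signal[1:]) if (a < 0) != (b < 0))
    return crossings * SAMPLE_RATE / (2 * len(signal))


def kmeans_1d(values, k=2, iterations=20):
    """Plain k-means on scalar features; returns one cluster label per value."""
    lo, hi = min(values), max(values)
    # Spread the initial centers evenly across the data range (requires k >= 2).
    centers = [lo + (hi - lo) * j / (k - 1) for j in range(k)]
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assign each value to its nearest center.
        labels = [min(range(k), key=lambda j: abs(v - centers[j])) for v in values]
        # Move each center to the mean of its members.
        for j in range(k):
            members = [v for v, lab in zip(values, labels) if lab == j]
            if members:  # keep the old center if a cluster is empty
                centers[j] = sum(members) / len(members)
    return labels


# Illustrative frequencies only, not measurements from real dolphins.
tones_hz = [8_000, 9_500, 11_000, 30_000, 34_000, 38_000]
features = [dominant_freq(synth_tone(f)) for f in tones_hz]
labels = kmeans_1d(features, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

The lower tones end up in one cluster and the higher tones in the other, with no labels supplied. Linking clusters to behaviors (step 3) would additionally require time-aligned behavioral observations, which this sketch does not attempt.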

What sets this project apart is its use of self-supervised learning. This means the model could learn directly from the audio data without all of it first being manually labeled, which is of great value when working with complex natural phenomena where labels are scarce or subjective.

Furthermore, the AI model was tuned to pick up ultrahigh-frequency sounds. Dolphins produce sounds at frequencies spanning from 20 kHz to more than 150 kHz, well beyond the range of human hearing. Analyzing these frequencies helped Google's AI reveal layers of communication that were previously undetectable.
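These frequencies have a practical consequence for recording equipment. By the Nyquist criterion, a digital recording can only represent frequencies below half its sample rate. The short sketch below works through that arithmetic for the 150 kHz figure quoted above; the function names are made up for illustration, and nothing here describes the hardware actually used in the project.

```python
def min_sample_rate_hz(max_signal_hz):
    """Nyquist criterion: the sample rate must exceed twice the highest frequency."""
    return 2 * max_signal_hz


def max_captured_hz(sample_rate_hz):
    """Highest frequency a recording at this sample rate can represent."""
    return sample_rate_hz / 2


DOLPHIN_UPPER_HZ = 150_000   # upper figure quoted in the article
CD_SAMPLE_RATE_HZ = 44_100   # standard audio CD sample rate, for comparison

print(min_sample_rate_hz(DOLPHIN_UPPER_HZ))  # 300000
print(max_captured_hz(CD_SAMPLE_RATE_HZ))    # 22050.0
```

In other words, capturing sounds up to 150 kHz requires sampling faster than 300 kHz, while ordinary consumer audio at 44.1 kHz tops out near 22 kHz and would miss most of that range.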

Main Results: Are We Hearing Dolphin "Words"?

So, what did the artificial intelligence reveal?

It picked out particular acoustic patterns associated with specific activities. For instance, it observed that certain whistle types occurred consistently during group gatherings, and it noted click trains associated with cooperative hunting. Some of these patterns were too subtle or too rapid for conventional analysis techniques to detect.

More tantalizing still, the AI started grouping repeated sound sequences that might represent organized communication, essentially the foundation of a dolphin "language." These clusters suggest a sophisticated system of vocal labels, calls, and responses among dolphins, with an organization somewhat similar to linguistic syntax.

One especially interesting finding was the regular pairing of particular whistle patterns with particular social situations, including play, confrontation, and greetings. This suggests that dolphins may convey more than simple signals, using vocal "phrases" or "sentences."

The scientists are quick to point out, though, that we are not yet translating dolphin conversation into English. The AI does not deliver clear semantic content (such as "Let's go hunting" or "Watch out for that shark"), but it does show an orderly, regular structure in dolphin communication, which is a fundamental first step.

Why This Is Important: Beyond Scientific Knowledge

Though decoding dolphin chatter might seem like a niche pursuit, the consequences are significant and wide-ranging. Here is why it matters:

1. Wildlife conservation

Conservationists can use knowledge of animal communication to track the behavior and wellbeing of species in their natural habitat. Recognizing signs of stress, mating calls, or territorial conflicts helps scientists protect habitats more effectively and manage interactions with human activities such as shipping and fishing.

2. Animal welfare in captivity

AI-driven acoustic monitoring in marine parks and aquariums could offer insight into the psychological and emotional state of dolphins. By tracking changes in vocal behavior that indicate anxiety or boredom, caretakers could improve their approach to care.

3. Advancing AI models

Google's bioacoustics research stretches the limits of what artificial intelligence can do with sophisticated, high-dimensional data. These advances could carry over to other fields, from medical diagnostics to speech recognition and beyond.

4. Philosophical and ethical implications

Decoding dolphin communication raises serious ethical questions about animal rights, sentience, and our responsibility toward other intelligent species. Understanding what dolphins are saying, and whether they have complex inner lives, challenges us to rethink how we perceive and treat them.

What's Next: The Path to Interspecies Communication

Though no longer purely the stuff of science fiction, the vision of having a "conversation" with a dolphin remains far off. Google's AI has laid the groundwork for more advanced models that might one day not only classify dolphin sounds but also place them in context.

Future investigations may link video of dolphin behavior with acoustic recordings, allowing artificial intelligence to correlate body language and vocalizations in real time. Improvements in underwater acoustic technology will also increase the amount and quality of available data.

Partnerships between technology companies such as Google and marine research institutions will also be crucial. Although artificial intelligence can analyze the data, professional marine biologists are needed to validate and interpret the results in the context of animal behavior science.

The Broader Perspective: AI as a Tool for Nature

Google's dolphin project is part of a broader trend of applying artificial intelligence to conserve and understand the natural world. From analyzing weather data to monitoring endangered species and mapping ecosystems, AI is becoming one of the most effective instruments in the conservationist's toolkit.

This change marks an intriguing reversal: for many years, technology was viewed as a force that distanced people from nature. Now it is evolving into a bridge, a means of reaching the other inhabitants of our planet in ways never before conceivable.

With dolphins, artificial intelligence might at last allow us a glimpse into the minds of these remarkable sea creatures, bringing us closer to answering one of humanity's most ancient riddles: are we really alone in our capacity for thought, language, and emotion?

Final Reflections: Listening to the Ocean

There is something poetic about a technology born of human inventiveness learning to pay attention to the language of another species. Projects like Google's AI dolphin translator remind us of the importance of humility and curiosity in a world where so much of nature's knowledge has gone unnoticed or been misinterpreted.
